This is a follow-up topic for https://fabscan.org/community/show-your-scans/test-scans-with-ciclop-fabscan

That looks really impressive, thank you so much for sharing this! I think you are the first user to show Ciclop results. Ciclop support is still in an early alpha stage.

The calibration was a bit challenging, but the scans look pretty impressive for the setup.

Can you share some pictures of your hardware setup and tell us something about your calibration experience? What were the challenging parts?

I think there is even room for improvement, like simplifying the calibration or speeding up the scans by at least 10x without compromising quality.

Yes, there is room for improvement! Have you already improved something to obtain these results? At the moment I am working on the Python 3 migration, and along with that I am also trying to improve the speed. If you have any suggestions, feel free to share your thoughts.

In case these improvements are of interest to anyone, I can share them here.

Appreciate it!

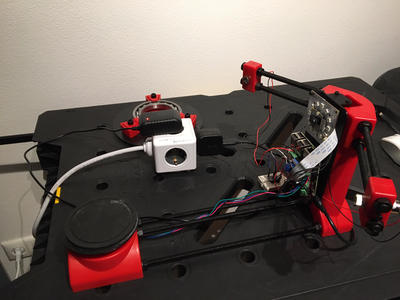

The setup uses the FabScan shield for the LEDs and the new TMC stepper controller. It also uses a stripped-down version of the turntable, which removes the heavy, oversized original bearing.

Looking at the current calibration I identified a couple of potential issues:

- The checkerboard poses are usually not sufficient for a precise camera calibration. At least two additional poses that are not derived from the turntable motion should be added.

- The two 3D profiles used for laser-plane estimation are very close to each other. The checkerboard poses should be adapted so that the profiles are as far apart as possible.

- The center of the turntable is estimated from support points covering only about 1/3 of a circle. This amounts to extrapolation, which amplifies the measurement error.
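To illustrate the last point, here is a minimal sketch (not FabScan's actual code) of an algebraic least-squares circle fit (the Kasa method) plus a small simulation that compares the center error when the support points cover 120° versus the full circle. The turntable geometry, noise level, and point count are made-up values.

```python
import math
import random
from typing import List, Sequence, Tuple


def solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def fit_circle(points: Sequence[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Algebraic (Kasa) least-squares fit of x^2 + y^2 + D*x + E*y + F = 0.

    Returns (center_x, center_y, radius).
    """
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y in points:
        row = (x, y, 1.0)
        rhs = -(x * x + y * y)
        for i in range(3):
            atb[i] += row[i] * rhs
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    d, e, f = solve3(ata, atb)
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)


def mean_center_error(arc_deg: float, noise: float, n: int = 60,
                      trials: int = 200, seed: int = 1) -> float:
    """Mean distance between fitted and true center for points spread over arc_deg."""
    rng = random.Random(seed)
    true_cx, true_cy, true_r = 10.0, -5.0, 100.0  # made-up turntable geometry
    total = 0.0
    for _ in range(trials):
        pts = []
        for k in range(n):
            t = math.radians(arc_deg * k / (n - 1))
            r = true_r + rng.gauss(0.0, noise)  # radial measurement noise
            pts.append((true_cx + r * math.cos(t), true_cy + r * math.sin(t)))
        cx, cy, _ = fit_circle(pts)
        total += math.hypot(cx - true_cx, cy - true_cy)
    return total / trials
```

Comparing `mean_center_error(120, 0.5)` with `mean_center_error(360, 0.5)` shows the effect of the limited arc. Collecting support points over the full revolution turns the fit into an interpolation; a geometric (orthogonal-distance) fit would also reduce the known short-arc bias of the Kasa method, but it does not replace good angular coverage.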

Some possible improvements I can think of in the context of FabScan:

- Use of additional poses for precise auto calibration.

- The Pi supports very precise interrupts in the microsecond range running on the GPU. This makes it possible to timestamp the STEP pulses sent to the motor controller and correlate them with the camera images. This should allow a full scan in around 180 seconds at maximum microstep resolution.

- The Pi camera supports raw access, which makes it possible to run the laser extraction before de-Bayering and JPEG compression. This significantly increases the sub-pixel resolution. Together with a decent sub-pixel peak detector, around 1/10 of a pixel should be reachable.
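As an illustration of the sub-pixel peak detector mentioned in the last point, here is a minimal sketch of one common choice: a three-point parabola fit around the brightest pixel of each image row. It is an assumption for illustration, not the detector the poster uses; Gaussian fits or center-of-mass are common alternatives, and whether 1/10 of a pixel is reached depends on noise and laser line width.

```python
from typing import List, Optional, Sequence


def subpixel_peak(profile: Sequence[float], min_intensity: float = 0.0) -> Optional[float]:
    """Sub-pixel position of the brightest sample in a 1D intensity profile.

    Fits a parabola through the maximum and its two neighbours and returns the
    vertex; returns None if the maximum does not exceed min_intensity.
    """
    if not profile:
        return None
    i = max(range(len(profile)), key=profile.__getitem__)
    if profile[i] <= min_intensity:
        return None
    if i == 0 or i == len(profile) - 1:
        return float(i)  # peak on the border: no neighbour on one side
    left, mid, right = profile[i - 1], profile[i], profile[i + 1]
    denom = left - 2.0 * mid + right
    if denom == 0.0:
        return float(i)  # flat top: keep the integer position
    return i + 0.5 * (left - right) / denom


def extract_line(image: Sequence[Sequence[float]],
                 min_intensity: float = 0.0) -> List[Optional[float]]:
    """Sub-pixel laser position for every row of a single-channel image."""
    return [subpixel_peak(row, min_intensity) for row in image]
```

With raw capture, the rows passed to `extract_line` would be the red sensor samples rather than de-Bayered, JPEG-compressed pixels.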

From a different project, I already have some C++ code supporting raw access to the Pi camera, with interrupt handling and turntable calibration. It also includes some code related to camera calibration that is now part of OpenCV 4.

Thank you for sharing your thoughts. Your proposals are good!

> The checkerboard poses are usually not sufficient for a precise camera calibration. At least two additional poses that are not derived from the turntable motion should be added.

I know, and I am still thinking about how I can achieve that without too much user interaction. I think there must be one or more additional steps in which the user repositions the calibration sheet. However, we are working on a new UI, started from scratch, and I don't want to invest more time in the old one. I think I can already start adding this to the backend.

> The two 3D profiles used for laser-plane estimation are very close to each other. The checkerboard poses should be adapted so that the profiles are as far apart as possible.

I will have a closer look at that.
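The effect of the profile distance on the laser-plane estimate can be illustrated with a small least-squares experiment. This is only a sketch under simplifying assumptions, not FabScan's calibration code: the plane is written as z = a*x + b*y + c in camera coordinates (which requires that the laser plane is not parallel to the camera's z axis), each profile is a straight, noisy line of 3D points, and the plane coefficients, noise level, and separations are made-up numbers.

```python
import random
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
TRUE_PLANE = (0.6, 0.05, 300.0)  # made-up laser plane z = a*x + b*y + c (mm)


def solve3(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the 3x3 system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]  # augmented matrix
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))) / a[r][r]
    return x


def fit_plane(points: Sequence[Point3]) -> Tuple[float, float, float]:
    """Least-squares fit of z = a*x + b*y + c via the normal equations; returns (a, b, c)."""
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for x, y, z in points:
        row = (x, y, 1.0)
        for i in range(3):
            atb[i] += row[i] * z
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    a, b, c = solve3(ata, atb)
    return a, b, c


def two_profiles(separation: float, noise: float, rng: random.Random,
                 n: int = 50) -> List[Point3]:
    """Two straight, vertical laser profiles on TRUE_PLANE, `separation` mm apart in x."""
    a, b, c = TRUE_PLANE
    pts: List[Point3] = []
    for x in (-separation / 2.0, separation / 2.0):
        for k in range(n):
            y = -50.0 + 100.0 * k / (n - 1)
            pts.append((x, y, a * x + b * y + c + rng.gauss(0.0, noise)))
    return pts


def mean_slope_error(separation: float, noise: float = 0.2,
                     trials: int = 200, seed: int = 2) -> float:
    """Mean absolute error of the fitted slope `a` over repeated noisy trials."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        a_fit, _, _ = fit_plane(two_profiles(separation, noise, rng))
        total += abs(a_fit - TRUE_PLANE[0])
    return total / trials
```

For this model the standard deviation of the fitted slope is inversely proportional to the separation of the two profiles, so `mean_slope_error(5.0)` is far larger than `mean_slope_error(100.0)`. That is the reason for choosing checkerboard poses that push the profiles as far apart as possible.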

> The center of the turntable is estimated from support points covering only about 1/3 of a circle. This amounts to extrapolation, which amplifies the measurement error.

That's correct. It is something I changed a couple of months ago; I think I need to revert it.

> The Pi supports very precise interrupts in the microsecond range running on the GPU. This makes it possible to timestamp the STEP pulses sent to the motor controller and correlate them with the camera images. This should allow a full scan in around 180 seconds at maximum microstep resolution.

I have to think about that. However, if I have understood correctly, this would invert the current process. At the moment the scan processor waits until an image is processed, then turns to the next position, processes the next image, and so on. The "motor controller" triggers the image processing. With your suggestion, would the image processing trigger the motor event?

One time-consuming issue is that I added a sleep after the laser is turned on (only in two-laser mode), because I found that it takes a while until the laser shows up in the camera image. I have not yet identified which part of the software causes this delay.

I am also thinking about using the circular queue only for the preview in the settings dialog and switching to direct image capture during the scan. That might also be a good starting point for the raw image processing you mentioned:

> The Pi camera supports raw access, which makes it possible to run the laser extraction before de-Bayering and JPEG compression. This significantly increases the sub-pixel resolution. Together with a decent sub-pixel peak detector, around 1/10 of a pixel should be reachable.

Getting raw access to the camera is not a big deal, because the picamera library already provides it:

https://picamera.readthedocs.io/en/release-1.13/recipes2.html#raw-bayer-data-captures
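Once the raw Bayer mosaic has been captured (for example as described in the linked recipe), the laser extraction can work directly on the red photosites. The sketch below assumes the mosaic is already available as a 2D array of sensor values; it does not call picamera itself. The default `"BGGR"` pattern is an assumption, since the actual order depends on the sensor and on flip settings, and the integer column it returns could be refined with a sub-pixel detector.

```python
from typing import List, Optional, Sequence, Tuple

Mosaic = Sequence[Sequence[int]]  # raw sensor values, one entry per photosite


def red_offset(pattern: str) -> Tuple[int, int]:
    """(row, col) of the red photosite inside each 2x2 Bayer cell.

    `pattern` lists the 2x2 cell in row-major order, e.g. "BGGR" or "RGGB".
    """
    pattern = pattern.upper()
    if sorted(pattern) != sorted("RGGB"):
        raise ValueError(f"not a Bayer pattern: {pattern!r}")
    return divmod(pattern.index("R"), 2)


def red_plane(mosaic: Mosaic, pattern: str = "BGGR") -> List[List[int]]:
    """Red samples only (half resolution), taken before any de-Bayering."""
    dy, dx = red_offset(pattern)
    return [list(row[dx::2]) for row in mosaic[dy::2]]


def laser_columns(mosaic: Mosaic, pattern: str = "BGGR",
                  threshold: int = 0) -> List[Optional[int]]:
    """Full-resolution column of the brightest red sample in every red row."""
    _, dx = red_offset(pattern)
    cols: List[Optional[int]] = []
    for row in red_plane(mosaic, pattern):
        i = max(range(len(row)), key=row.__getitem__)
        # Map the half-resolution index back to the sensor column.
        cols.append(2 * i + dx if row[i] > threshold else None)
    return cols
```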

> From a different project, I already have some C++ code supporting raw access to the Pi camera, with interrupt handling and turntable calibration. It also includes some code related to camera calibration that is now part of OpenCV 4.

The interrupt part would be very interesting. As I already mentioned in my previous post, upgrading to Python 3 is one of the most important steps right now. Along with that I need to add a newer version of OpenCV. I need to recompile the Raspbian packages, because the default packages shipped with Raspbian lack multi-core support (they are not compiled with TBB/OpenMP). I am thinking of switching to OpenCV 4, but I am not sure whether it is as well optimized as OpenCV 3, since it is still very new. I think I need to run some comparison tests of both.

Back to the speed-up topic: I came to the conclusion that the most time-consuming step is extracting the laser line from the image. I did some investigation on how to speed this up and found that Python's multiprocessing can be a bottleneck, which might be improved by using Ray (https://github.com/ray-project/ray).
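Laser-line extraction is independent per frame, so it can be fanned out to worker processes. A minimal sketch using the standard library's `concurrent.futures` (not Ray, and not FabScan's current implementation) is shown here. Frames are plain nested lists of one color channel; real frames would be NumPy/OpenCV arrays, and the threshold of 50 is an arbitrary example value.

```python
from concurrent.futures import ProcessPoolExecutor
from typing import List, Optional, Sequence

Frame = Sequence[Sequence[int]]  # one channel, rows of pixel intensities


def extract_laser_line(frame: Frame, threshold: int = 50) -> List[Optional[int]]:
    """Brightest column per row, or None where the row stays below threshold."""
    result: List[Optional[int]] = []
    for row in frame:
        if not row:
            result.append(None)
            continue
        col = max(range(len(row)), key=row.__getitem__)
        result.append(col if row[col] >= threshold else None)
    return result


def extract_all(frames: Sequence[Frame], workers: int = 4,
                chunksize: int = 8) -> List[List[Optional[int]]]:
    """Process frames independently; fan out to worker processes if workers > 1."""
    if workers <= 1:
        return [extract_laser_line(f) for f in frames]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # chunksize batches several frames per inter-process message. Each frame
        # still has to be pickled and copied to a worker, which is the kind of
        # overhead that can make multiprocessing a bottleneck for large images.
        return list(pool.map(extract_laser_line, frames, chunksize=chunksize))
```

On platforms that start workers with `spawn` (Windows, and macOS by default), call `extract_all(frames, workers=4)` from inside an `if __name__ == "__main__":` guard.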

> I have to think about that. However, if I have understood correctly, this would invert the current process. At the moment the scan processor waits until an image is processed, then turns to the next position, processes the next image, and so on. The "motor controller" triggers the image processing. With your suggestion, would the image processing trigger the motor event?

Ideally yes, but vsync is not supported in raw mode because it is generated on the GPU rather than by the camera. However, due to the rolling shutter the benefit is rather small, and you can use a free-running servo and camera if you know the timing of both.
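With a free-running motor and camera, each image row can be assigned a turntable angle afterwards by looking up the motor position at the moment the row was exposed. A minimal sketch, assuming the STEP pulse timestamps (in microseconds) were recorded on the same clock as the frame timestamps; how they are captured, the rolling-shutter line time, and the steps per revolution are hypothetical inputs, not values from FabScan or the Pi camera documentation.

```python
import bisect
from typing import Sequence


def steps_at(step_times_us: Sequence[float], t_us: float) -> float:
    """Motor position in (micro)steps at time t_us.

    step_times_us holds the sorted timestamps of the STEP pulses. Between two
    pulses the position is interpolated linearly, which assumes the table
    turns smoothly (reasonable with fine microstepping).
    """
    n = bisect.bisect_right(step_times_us, t_us)  # pulses issued up to t_us
    if n == 0 or n == len(step_times_us):
        return float(n)
    t0, t1 = step_times_us[n - 1], step_times_us[n]
    return n + (t_us - t0) / (t1 - t0)


def row_time_us(frame_start_us: float, row: int, line_time_us: float) -> float:
    """Exposure time of one image row under a rolling shutter (hypothetical line time)."""
    return frame_start_us + row * line_time_us


def angle_deg(step_times_us: Sequence[float], t_us: float, steps_per_rev: int) -> float:
    """Turntable angle in degrees, wrapped to [0, 360)."""
    return (steps_at(step_times_us, t_us) * 360.0 / steps_per_rev) % 360.0
```

For example, `angle_deg(step_times, row_time_us(frame_start, row, line_time), steps_per_rev)` gives the angle for a single laser point, so the scan loop no longer has to stop the motor for every image.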

> Back to the speed-up topic: I came to the conclusion that the most time-consuming step is extracting the laser line from the image. I did some investigation on how to speed this up and found that Python's multiprocessing can be a bottleneck, which might be improved by using Ray (https://github.com/ray-project/ray).

I did some testing a couple of months ago and was able to do laser-line extraction at 40 fps on a Pi 4. The goal is to write new software for the Ciclop that takes full advantage of the Pi 4 and its V2 camera, which is pretty good and can match industrial cameras at a fraction of the cost.

> The interrupt part would be very interesting. As I already mentioned in my previous post, upgrading to Python 3 is one of the most important steps right now. Along with that I need to add a newer version of OpenCV.

My planned software structure is:

- Pi backend:
  - C++ / OpenCV 4
  - gRPC for language bindings to Python
- Web interface:
  - https://dash-gallery.plotly.host/Portal
  - removing the need for the web frontend

Here are some scans taken with a Pi 4 and V2 camera, but with a pretty hacked-together setup, using for example a TMC controller in combination with an old Eora3d turntable: https://sketchfab.com/lilscan

After playing around a little bit with my old Ciclop, I saw your upgrade post for the Ciclop and ordered the FabScan shield. Now I am wondering if this path would be interesting for FabScan as well because the hardware is more or less identical.

> Ideally yes, but vsync is not supported in raw mode because it is generated on the GPU rather than by the camera. However, due to the rolling shutter the benefit is rather small, and you can use a free-running servo and camera if you know the timing of both.

Got it.

> Here are some scans taken with a Pi 4 and V2 camera, but with a pretty hacked-together setup, using for example a TMC controller in combination with an old Eora3d turntable: https://sketchfab.com/lilscan

The results look very impressive!

> Now I am wondering if this path would be interesting for FabScan as well because the hardware is more or less identical.

Sure, it is interesting. Maybe we should work together. I am still looking for help, because at the moment I am the only one writing the software.

Yes, let's try to coordinate. I will put together some information on how to speed up the Ciclop/FabScan and post it here. This should be a good starting point for further improvements.